The future of democracy isn’t just being decided in Washington—it’s unfolding in your city council chambers, school board meetings, and local planning commissions. As artificial intelligence becomes increasingly sophisticated, municipalities across America are deploying AI tools to manage everything from traffic patterns to public feedback. This transformation raises a critical question: Will AI in local democracy empower citizens or silence their authentic voices?

The integration of AI in local democracy represents both an unprecedented opportunity for civic efficiency and a potential threat to the foundational principle of representative government—that every voice matters. While AI can help process massive amounts of data and streamline bureaucratic processes, it can also be weaponized to flood public comment systems with artificially generated feedback, drowning out genuine community concerns.

The Automation Promise: Where AI Helps Local Government

AI in local democracy isn’t inherently threatening—in fact, when deployed responsibly, it offers remarkable benefits for both government efficiency and citizen engagement.

Streamlining Administrative Burdens

Local governments are drowning in paperwork. City councils process hundreds of pages of meeting minutes, budget documents, and public comments every month. AI-powered tools can draft summaries of these documents in minutes, letting elected officials spend their time on decisions rather than on document review. Used this way, AI can save staff time, and therefore taxpayer money, while making government more responsive.

Optimizing Public Services

Smart cities are leveraging AI to improve daily life for residents. Traffic management systems use machine learning to reduce congestion, potentially cutting commute times by 15-20%. Predictive maintenance algorithms identify infrastructure problems before they become emergencies—catching water main weaknesses before they burst or flagging potholes before they damage vehicles.

Enhancing Accessibility

AI translation tools are breaking down language barriers in diverse communities. Real-time transcription and translation services let non-English speakers follow and participate in public meetings as they happen. Used this way, AI helps keep civic engagement from being limited by linguistic divides.

Detecting Fraud and Waste

Machine learning algorithms can identify anomalies in procurement processes, flagging potential fraud in vendor contracts or budget irregularities that human auditors might miss. This watchdog function of AI in local democracy protects public resources and builds trust in government spending.
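To make “identifying anomalies” concrete, here is a minimal Python sketch of one simple technique an auditor might start with: the modified z-score, a robust outlier rule built on the median and the median absolute deviation (MAD). It is a stand-in for illustration, not how any particular city or vendor detects fraud. The invoice IDs, the amounts, and the 3.5 cutoff (a commonly cited rule of thumb) are assumptions.

```python
from statistics import median

def modified_z_scores(amounts: list[float]) -> list[float]:
    """Robust z-scores: 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation from the median."""
    center = median(amounts)
    mad = median([abs(x - center) for x in amounts])
    if mad == 0:
        # No spread to measure against; this sketch simply declines to score.
        return [0.0] * len(amounts)
    return [0.6745 * (x - center) / mad for x in amounts]

def flag_outlier_invoices(invoices: dict[str, float], cutoff: float = 3.5) -> list[str]:
    """Return IDs of invoices whose amount is far from the typical amount."""
    ids = list(invoices)
    scores = modified_z_scores([invoices[i] for i in ids])
    return [i for i, score in zip(ids, scores) if abs(score) > cutoff]

# Hypothetical invoices from one vendor for the same recurring service.
invoices = {"INV-001": 1200, "INV-002": 1150, "INV-003": 1300,
            "INV-004": 1250, "INV-005": 9800}
print(flag_outlier_invoices(invoices))  # ['INV-005']
```

A flag is a reason for a human auditor to look more closely, not evidence of fraud. A legitimate one-off purchase would be flagged the same way.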

The ‘Bot Flood’: Generative AI and the Threat to Public Comment

The same technology that streamlines government operations also creates unprecedented opportunities for manipulation. The dark side of AI in local democracy is already emerging in public comment systems.

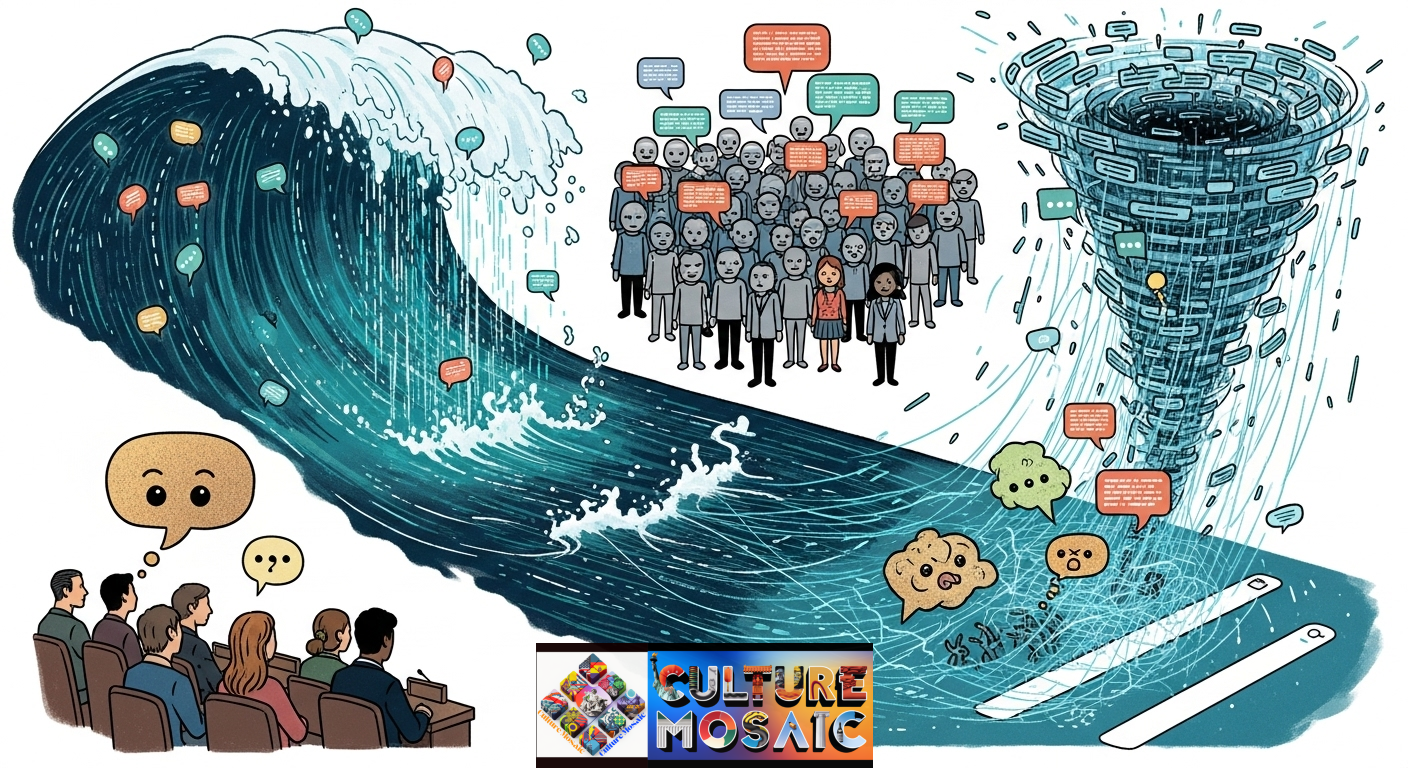

The Authenticity Crisis

Imagine a contentious zoning decision in your community. Developers want to build a high-density apartment complex in a residential neighborhood. Historically, interested parties would attend town hall meetings or submit written comments—each representing real human concern. Today, a single individual with access to ChatGPT or similar tools can generate thousands of unique, convincing public comments in minutes.

These AI-generated submissions aren’t obvious copy-paste jobs. They vary in tone, style, and specific arguments while pushing the same agenda. Local officials face a nearly impossible task: telling authentic community sentiment apart from artificially manufactured consensus.

Real-World Examples

This isn’t hypothetical. In 2023, several city councils reported suspicious patterns in public comment submissions: hundreds of letters with similar talking points but different wording, all submitted within hours. Although AI involvement is difficult to prove definitively, civic technology experts believe these are early instances of generative AI being weaponized against public participation in local democracy.
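The timing pattern in these reports, with many letters arriving within a few hours, is something a city can check for mechanically. Below is a minimal Python sketch that assumes the city can export each comment’s submission timestamp. The function name, the three-hour window, and the sample data are hypothetical. It slides a window over the sorted timestamps and reports the largest number of submissions that fall inside any span of that length.

```python
from datetime import datetime, timedelta

def max_in_window(timestamps: list[datetime], window: timedelta) -> int:
    """Largest number of submissions that fall within any span of length `window`."""
    times = sorted(timestamps)
    best = 0
    start = 0
    for end in range(len(times)):
        # Advance the left edge until the span [start, end] fits in the window.
        while times[end] - times[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best

# Hypothetical data: one comment a day for a week, then 120 in under two hours.
base = datetime(2024, 3, 1, 9, 0)
normal = [base + timedelta(days=d) for d in range(7)]
burst = [base + timedelta(days=7, minutes=m) for m in range(120)]

print(max_in_window(normal, timedelta(hours=3)))          # 1
print(max_in_window(normal + burst, timedelta(hours=3)))  # 120
```

A burst is only a prompt for closer review. A well-publicized hearing or an organizer’s email blast can produce the same spike from real residents.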

The Scaling Problem

Traditional astroturfing (fake grassroots movements) required significant resources—people to write letters, make phone calls, or attend meetings. Generative AI eliminates these constraints. A well-funded interest group or even a motivated individual can now simulate the appearance of massive public support or opposition at virtually no cost.

Undermining Civic Trust

When citizens suspect that public comment processes are compromised, they disengage. If your genuine concerns might be lost in a sea of AI-generated noise, why bother participating? This erosion of trust represents the most insidious threat of unregulated AI in local democracy—it transforms civic participation from a meaningful act into a seemingly futile gesture.

The Fairness Issue: Algorithmic Bias in Your City

Beyond manipulation concerns, AI in local democracy faces a fundamental challenge: algorithms reflect the biases embedded in their training data. When cities deploy AI for decision-making without careful oversight, they risk automating historical injustices.

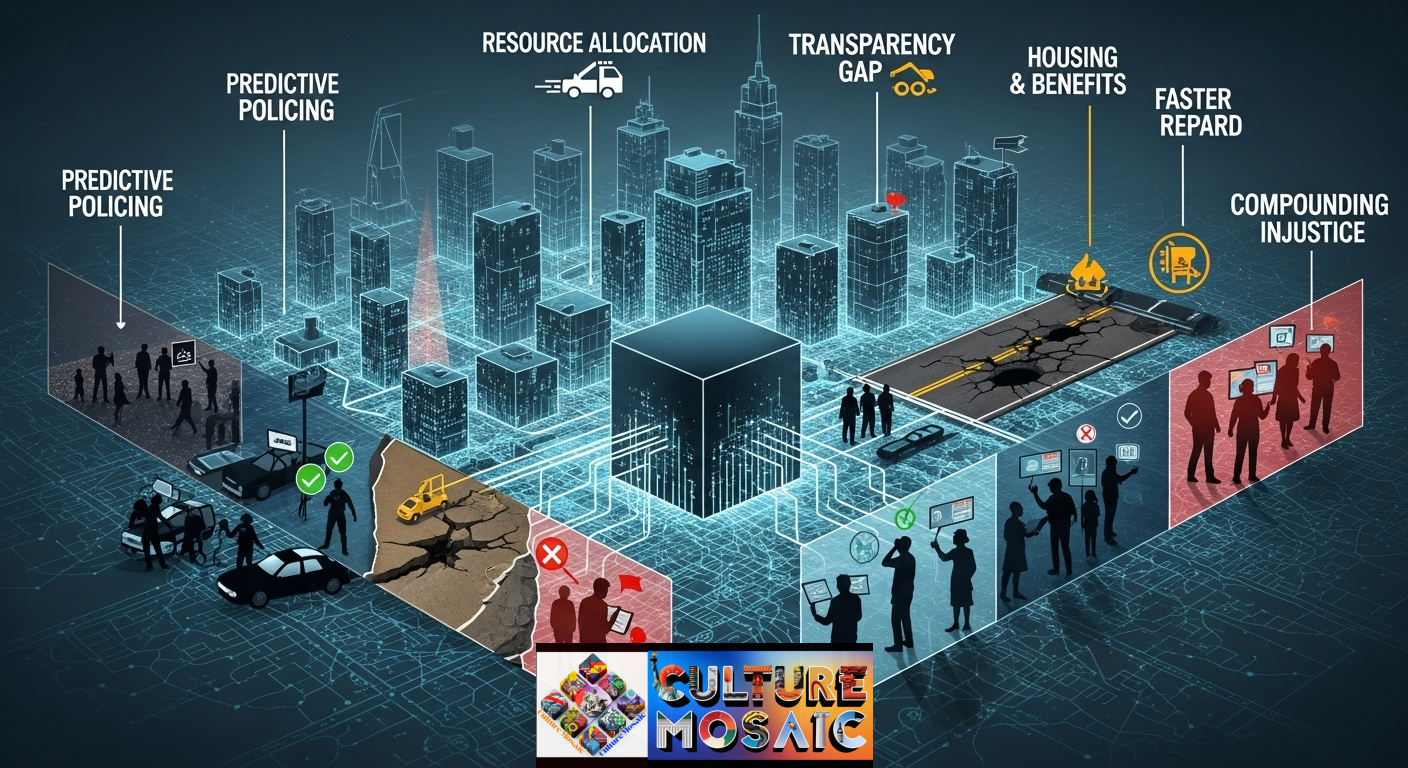

Predictive Policing Problems

Many cities use AI to predict crime hotspots, directing police resources to neighborhoods deemed “high-risk.” However, these predictions often rely on historical arrest data, which reflects where police have patrolled as much as where crime actually occurs. If police have historically over-patrolled minority neighborhoods, the AI learns to recommend more policing in those same areas. More patrols then produce more recorded arrests, which the system reads as confirmation of its prediction. The result is a feedback loop that perpetuates racial profiling.
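The feedback loop shows up clearly in a deliberately oversimplified simulation. This Python sketch assumes three things: two neighborhoods with identical underlying crime, a starting arrest record skewed toward one of them, and a “model” that sends every patrol to whichever area has the most recorded arrests. The neighborhood names, rates, and allocation rule are hypothetical. Real predictive policing systems are more complex, but the same dynamic can arise whenever new data is collected only where the model already points.

```python
def simulate_hotspot_policing(initial_arrests: dict[str, float], true_rate: float,
                              patrols_per_day: int, days: int) -> dict[str, float]:
    """Toy feedback loop: patrols go to the 'hotspot', and arrests are only
    recorded where patrols are present."""
    recorded = dict(initial_arrests)
    for _ in range(days):
        # The "model" ranks areas by recorded arrests and sends every patrol
        # to the top-ranked one.
        hotspot = max(recorded, key=recorded.get)
        # Both areas share the same true_rate (arrests a patrol would record
        # per day in either area), but only the patrolled area gets new records.
        recorded[hotspot] += true_rate * patrols_per_day
    return recorded

# Hypothetical starting data: Northside was historically over-patrolled.
result = simulate_hotspot_policing({"Northside": 60, "Southside": 40},
                                   true_rate=0.5, patrols_per_day=10, days=30)
print(result)  # {'Northside': 210.0, 'Southside': 40}
```

After 30 days, Northside’s record has more than tripled while Southside’s is frozen at its starting value, even though the two areas were defined to have the same crime rate. The data now appear to “confirm” the original skew.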

Resource Allocation Inequities

AI systems that prioritize infrastructure repairs, allocate social services, or determine code enforcement priorities can embed socioeconomic bias. If an algorithm learns that wealthy neighborhoods receive faster response times for pothole repairs (due to more complaints or political influence), it may recommend continuing that pattern, further disadvantaging under-resourced communities.
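A small example shows how the choice of signal drives the outcome. In this Python sketch, the same four pothole reports are ranked twice: once by complaint volume and once by inspected severity. The neighborhoods, scores, and data fields are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class RepairRequest:
    request_id: str
    neighborhood: str
    severity: int    # 1 (cosmetic) to 5 (dangerous), from a field inspection
    complaints: int  # number of resident reports received

def rank(requests: list[RepairRequest], key) -> list[str]:
    """Return request IDs ordered from highest to lowest priority."""
    return [r.request_id for r in sorted(requests, key=key, reverse=True)]

# Hypothetical queue: Hillcrest residents file many reports, Riverside residents few.
repair_queue = [
    RepairRequest("R1", "Hillcrest", severity=2, complaints=14),
    RepairRequest("R2", "Hillcrest", severity=3, complaints=9),
    RepairRequest("R3", "Riverside", severity=5, complaints=2),
    RepairRequest("R4", "Riverside", severity=4, complaints=1),
]

print(rank(repair_queue, key=lambda r: r.complaints))  # ['R1', 'R2', 'R3', 'R4']
print(rank(repair_queue, key=lambda r: r.severity))    # ['R3', 'R4', 'R2', 'R1']
```

Ranking by complaints puts Hillcrest’s minor potholes first, while ranking by severity puts Riverside’s dangerous ones first. A model trained on past complaint-driven repair schedules would learn the first ordering.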

Housing and Benefits Screening

Some municipalities use AI to screen applications for public housing or social benefits, aiming to reduce processing times. However, these systems may inadvertently discriminate based on factors correlated with race or class. An algorithm might flag applicants with non-traditional work histories or housing instability—factors that disproportionately affect vulnerable populations—as “high-risk.”
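One common way auditors quantify this kind of disparity is to compare how often each group receives the favorable outcome. The Python sketch below computes each group’s pass rate (the share not flagged) and the ratio of the lowest rate to the highest. The 0.8 benchmark is the “four-fifths rule” from U.S. federal employment-selection guidelines. It is borrowed here only as a rough screening yardstick, not as the legal standard for housing or benefits. The group labels and data are synthetic.

```python
from collections import defaultdict

def pass_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of applicants in each group who were NOT flagged as high-risk."""
    totals: dict[str, int] = defaultdict(int)
    passed: dict[str, int] = defaultdict(int)
    for group, flagged in decisions:
        totals[group] += 1
        if not flagged:
            passed[group] += 1
    return {group: passed[group] / totals[group] for group in totals}

def impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group pass rate divided by the highest; 1.0 means parity."""
    return min(rates.values()) / max(rates.values())

# Synthetic example: 10 of 100 Group A applicants flagged, 40 of 100 Group B.
decisions = (
    [("Group A", i < 10) for i in range(100)]
    + [("Group B", i < 40) for i in range(100)]
)
rates = pass_rates(decisions)
ratio = impact_ratio(rates)
print(rates)            # {'Group A': 0.9, 'Group B': 0.6}
print(round(ratio, 3))  # 0.667
print(ratio < 0.8)      # True: below the four-fifths benchmark
```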

The Transparency Gap

Perhaps most troubling, many citizens have no idea they’re being subjected to algorithmic decision-making. Unlike a human bureaucrat whose reasoning you can question, AI systems often operate as “black boxes.” This opacity violates fundamental principles of due process and accountability that should be central to AI in local democracy.

Compounding Historical Injustice

AI doesn’t create bias from nothing—it amplifies patterns in existing data. In communities with histories of redlining, discriminatory lending, or unequal service provision, deploying AI without addressing these root causes means automating discrimination at unprecedented scale and speed.

How to Demand AI Transparency: The Citizen Checklist

Protecting the integrity of AI in local democracy requires active, informed citizenship. Here’s your practical guide to holding local government accountable for AI deployment.

Attend City Council Meetings with Specific Questions

When your municipality proposes adopting AI systems, come prepared with these questions:

- Data Sources: “What data is training this AI system, and from what time period?”

- Bias Testing: “Has this algorithm been audited for racial, economic, or geographic bias?”

- Human Oversight: “What human review process exists before AI recommendations become actions?”

- Vendor Transparency: “Can we review the source code, or is this proprietary ‘black box’ technology?”

- Performance Metrics: “How will we measure whether this system is working fairly?”
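The question about measuring fairness has concrete answers. One metric an independent auditor might report is each group’s false positive rate: among people who were not actually high-risk, how many did the system flag anyway? This Python sketch assumes the auditor has real outcomes to compare against the system’s predictions. The record format, group labels, and numbers are hypothetical.

```python
from collections import defaultdict

def false_positive_rates(records: list[tuple[str, bool, bool]]) -> dict[str, float]:
    """records: (group, predicted_high_risk, actually_high_risk) per case.
    Returns, for each group, the share of truly low-risk cases wrongly flagged."""
    negatives: dict[str, int] = defaultdict(int)
    false_pos: dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:  # only truly low-risk cases count toward the rate
            negatives[group] += 1
            if predicted:
                false_pos[group] += 1
    return {group: false_pos[group] / negatives[group] for group in negatives}

# Synthetic audit sample: 50 truly low-risk cases per group, plus 10 true positives each.
records = (
    [("Group A", i < 5, False) for i in range(50)] + [("Group A", True, True)] * 10
    + [("Group B", i < 15, False) for i in range(50)] + [("Group B", True, True)] * 10
)
print(false_positive_rates(records))  # {'Group A': 0.1, 'Group B': 0.3}
```

Here, Group B’s low-risk members are wrongly flagged three times as often as Group A’s. Other fairness metrics exist and can conflict with this one, so residents should ask which metric a city chose and why.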

Request AI Impact Assessments

Some governments now require “algorithmic impact assessments” before deploying AI systems, similar to environmental impact statements. Push your local government to adopt this practice. These assessments should:

- Identify all stakeholders affected by the AI system

- Analyze potential disparate impacts on vulnerable populations

- Establish clear accountability mechanisms

- Include public comment periods before implementation

Support Verification Systems for Public Input

Advocate for robust identity verification in public comment systems. Verification must be balanced against privacy concerns, but reasonable measures can help ensure that each comment comes from an actual constituent. Options include:

- Requiring address verification for written comments

- Prioritizing in-person testimony at meetings

- Implementing multi-factor authentication for online submission portals

- Publishing aggregated demographic data about commenters (without identifying individuals)
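For the last option, published government statistics commonly rely on small-cell suppression: counts are reported by area, but any count small enough to point to an identifiable person is hidden. Here is a minimal Python sketch, assuming the city records each commenter’s ward. The ward names and the threshold of 5 are illustrative assumptions, not a standard.

```python
from collections import Counter

def aggregate_with_suppression(districts: list[str], min_cell: int = 5) -> dict[str, object]:
    """Count commenters per district, hiding any count below min_cell."""
    counts = Counter(districts)
    return {
        district: (n if n >= min_cell else f"<{min_cell}")
        for district, n in sorted(counts.items())
    }

# Hypothetical: the ward each verified commenter listed.
commenters = ["Ward 1"] * 12 + ["Ward 2"] * 3 + ["Ward 3"] * 7
print(aggregate_with_suppression(commenters))
# {'Ward 1': 12, 'Ward 2': '<5', 'Ward 3': 7}
```

Suppression alone is not airtight. If the city also publishes the overall total, a hidden cell can sometimes be recovered by subtraction, so agencies often suppress additional cells as well.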

Join or Form a Civic Technology Watchdog Group

You don’t need to be a tech expert. Citizens’ groups across the country are successfully monitoring AI in local democracy:

- Request public records about AI contracts and deployment

- Crowdsource observations about algorithmic decision patterns

- Connect with academic researchers studying algorithmic fairness

- Share findings with local media to increase public awareness

Know Your Rights Under Public Records Laws

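Every state has public records laws (often called sunshine laws) that give you access to many government records. The federal Freedom of Information Act (FOIA) covers only federal agencies. For city and county records, file a request under your state’s public records law for: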

- Contracts with AI vendors

- Training materials for staff using AI systems

- Audit reports or evaluations of AI tool performance

- Communications between officials and AI companies

Even if your request is denied, the denial and the exemption cited to justify it can reveal what information your government is withholding about its AI systems.

Support Candidates Who Prioritize AI Accountability

Make algorithmic transparency an election issue. Ask candidates for local office:

- Will they support AI impact assessment requirements?

- Do they commit to human oversight of AI recommendations?

- Will they vote to ban undisclosed AI-generated public comments?

- Do they support citizen access to information about AI systems affecting their community?

Building an AI-Enhanced Democracy, Not an AI-Controlled One

The integration of AI in local democracy is inevitable—but its form is still being determined. The choices your community makes today about AI governance will shape civic life for decades.

The Path Forward Requires Balance

We shouldn’t reject AI in local democracy entirely. The efficiency gains, accessibility improvements, and analytical capabilities AI offers are too valuable to dismiss. However, we must insist on several non-negotiable principles:

Human-in-the-Loop Decision Making

AI should inform decisions, not make them. No consequential action affecting residents should result from a pure algorithmic recommendation without meaningful human review. This human oversight isn’t a bottleneck—it’s a safeguard ensuring that technology serves democratic values rather than replacing them.

Mandatory Transparency Standards

Citizens have a right to know when they’re interacting with AI systems and how those systems work. Local governments should be required to:

- Publicly disclose all AI deployments affecting residents

- Provide plain-language explanations of how algorithms make recommendations

- Allow independent audits of AI systems for bias and accuracy

- Create clear paths for challenging algorithmic decisions

Ongoing Community Engagement

AI governance can’t be a one-time decision. As systems evolve and new applications emerge, continuous community input is essential. Establish permanent civic technology advisory boards that include:

- Technical experts who can evaluate AI systems

- Community advocates representing potentially affected groups

- Legal scholars familiar with civil rights implications

- Ordinary citizens who bring common-sense perspectives

Consequences for Manipulation

Weaponizing AI to corrupt public comment processes should carry serious penalties. Cities should adopt ordinances that:

- Prohibit submitting AI-generated public comments without disclosure

- Impose significant fines for organizations caught using bot networks to simulate grassroots support

- Require lobbyists to certify that communications come from actual constituents

Your Voice Still Matters—But You Must Use It

The question isn’t whether AI will play a role in local democracy—it already does. The question is whether ordinary citizens will shape how that technology is deployed or whether decisions will be made behind closed doors by tech vendors and officials seeking efficiency above all else.

Every council decision about AI made without citizen input, every algorithm deployed without transparency, every public comment system compromised by automated submissions: each represents a small abdication of democratic participation. Individually, they seem minor. Collectively, they could fundamentally alter the relationship between citizens and their government.

The integrity of local democracy in the age of AI depends on engaged citizens who refuse to let technology become a barrier between them and their representatives. Your participation matters now more than ever, not despite technological change but because of it.

Frequently Asked Questions About AI in Local Democracy

1. How can I tell if a public comment is AI-generated?

Individual AI-generated comments are nearly impossible to identify with certainty—that’s precisely what makes them problematic. However, patterns may reveal manipulation: multiple comments with similar arguments but varied wording submitted within short timeframes, generic language that lacks specific local details, or commenters whose identities can’t be verified through public records. If you’re concerned, attend meetings in person where you can see who’s actually participating, and encourage your local government to implement verification systems for written submissions.
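The “similar arguments but varied wording” pattern can be partly screened for in software. This minimal Python sketch compares every pair of comments by Jaccard similarity (shared items divided by total distinct items) over three-word sequences, often called “shingles.” The comment IDs, sample text, and 0.3 threshold are assumptions chosen for illustration. Lexical overlap of this kind catches only light rewording. Thoroughly paraphrased AI-generated comments can score near zero, so a tool like this supplements verification systems rather than replacing them.

```python
import re
from itertools import combinations

def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping n-word sequences in lowercased text."""
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (0.0 if both empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def similar_pairs(comments: dict[str, str], threshold: float = 0.3) -> list[tuple[str, str]]:
    """Pairs of comment IDs whose word-sequence overlap meets the threshold."""
    sets = {cid: shingles(text) for cid, text in comments.items()}
    return [(x, y) for x, y in combinations(sorted(sets), 2)
            if jaccard(sets[x], sets[y]) >= threshold]

# Hypothetical submissions.
comments = {
    "c1": "The new tower will overload traffic on Elm Street.",
    "c2": "This new tower will overload traffic on Elm Street every day!",
    "c3": "Please fix the broken swings at Maple Park.",
}
print(similar_pairs(comments))  # [('c1', 'c2')]
```

Here, c1 and c2 share 6 of their 10 distinct three-word sequences (similarity 0.6), while the unrelated c3 shares none. Comparing every pair grows quadratically with the number of comments, which is manageable for hundreds of submissions.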

2. Is my city using AI right now without telling me?

Possibly. Many municipalities have adopted AI tools without formal announcements or public debate. Common applications include chatbots on city websites, predictive analytics for service requests, and automated screening systems for permits or benefits. File a public records request asking for all contracts with companies providing “artificial intelligence,” “machine learning,” or “algorithmic decision-making” services. Your city is legally obligated to respond, and the answer may surprise you.

3. Can AI actually improve civic engagement, or does it just create problems?

AI has legitimate potential to enhance democracy. Translation services can bring non-English speakers into the process, accessibility tools can help disabled residents participate, and summarization algorithms can help citizens understand complex policy documents. The key is ensuring these tools supplement human engagement rather than replacing it. AI should lower barriers to participation, not create new ones or enable manipulation.

4. What legal protections exist against algorithmic bias in local government?

Legal frameworks are still developing. The Fourth Amendment protects against unreasonable searches, which is relevant for predictive policing. The Equal Protection Clause prohibits discriminatory government action, although courts generally require proof of discriminatory intent, and that is difficult to establish when an algorithm never explicitly considers race. Various civil rights statutes may also apply depending on the context. However, no comprehensive federal law specifically addresses algorithmic bias in local government. Several states and cities are developing their own regulations (New York City, for example, requires bias audits for automated hiring tools), but coverage is inconsistent. This legal gap makes citizen advocacy even more crucial.

5. Should cities ban AI entirely to protect democracy?

Outright bans are neither practical nor desirable. AI offers genuine benefits for government efficiency and service delivery that ultimately serve citizens. The solution isn’t prohibition but regulation—requiring transparency, mandating bias testing, ensuring human oversight, and creating accountability mechanisms. Think of it like food safety regulation: we don’t ban food production because contamination is possible; we create standards and inspection systems. The same approach should apply to AI in local democracy, with citizens as the inspectors ensuring technology serves the public interest.

The conversation about AI in local democracy is just beginning. Stay informed, stay engaged, and remember that democratic participation isn’t a spectator sport—it’s a civic responsibility that technology should enhance, not replace.